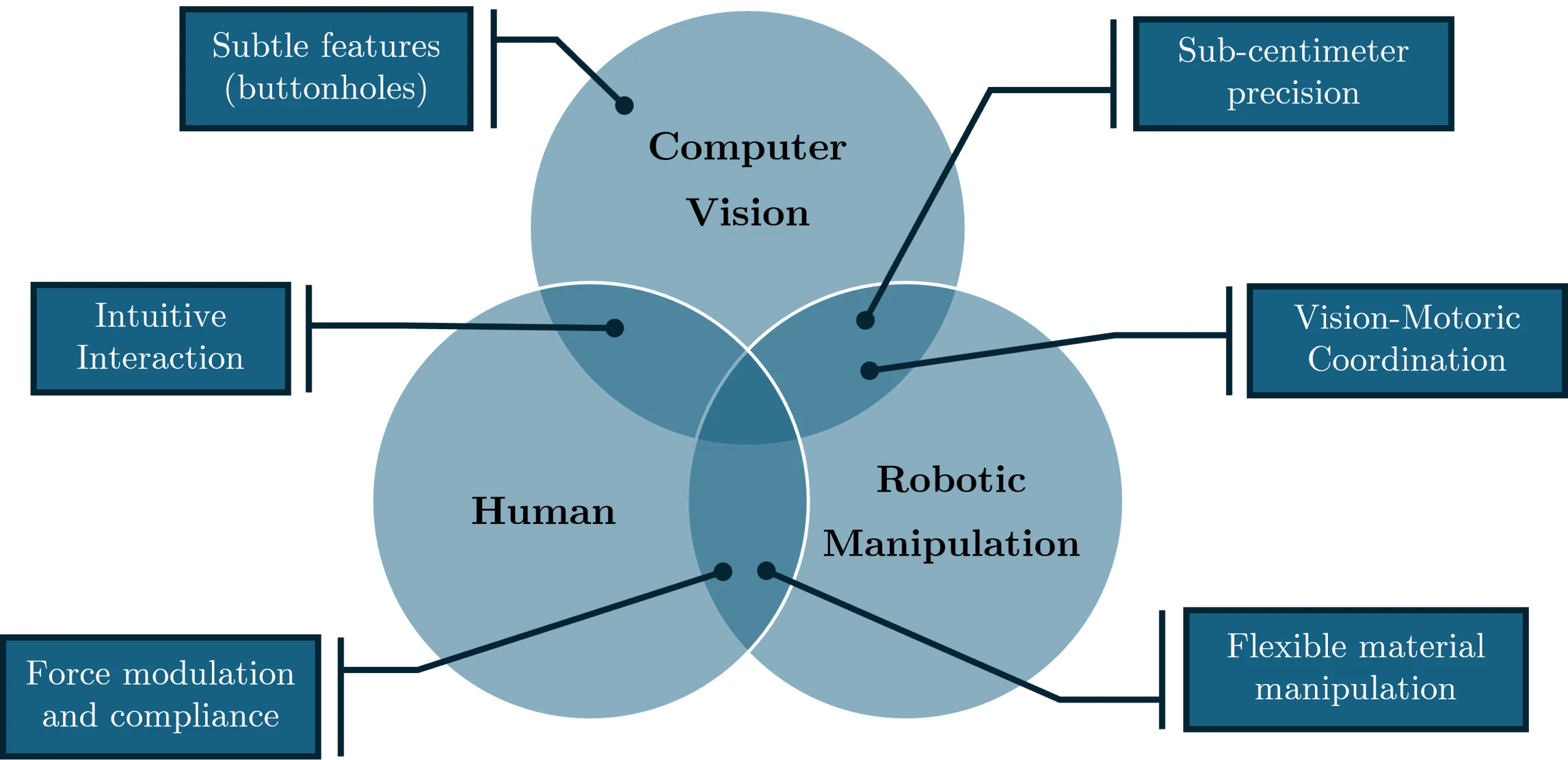

Vision-Guided Robotic Manipulation for Button Fastening

Project Overview

Master's research project developing a dual-arm robot system with LiDAR-based perception for precision button-fastening tasks, combining deep learning with path-planning algorithms.


Overview

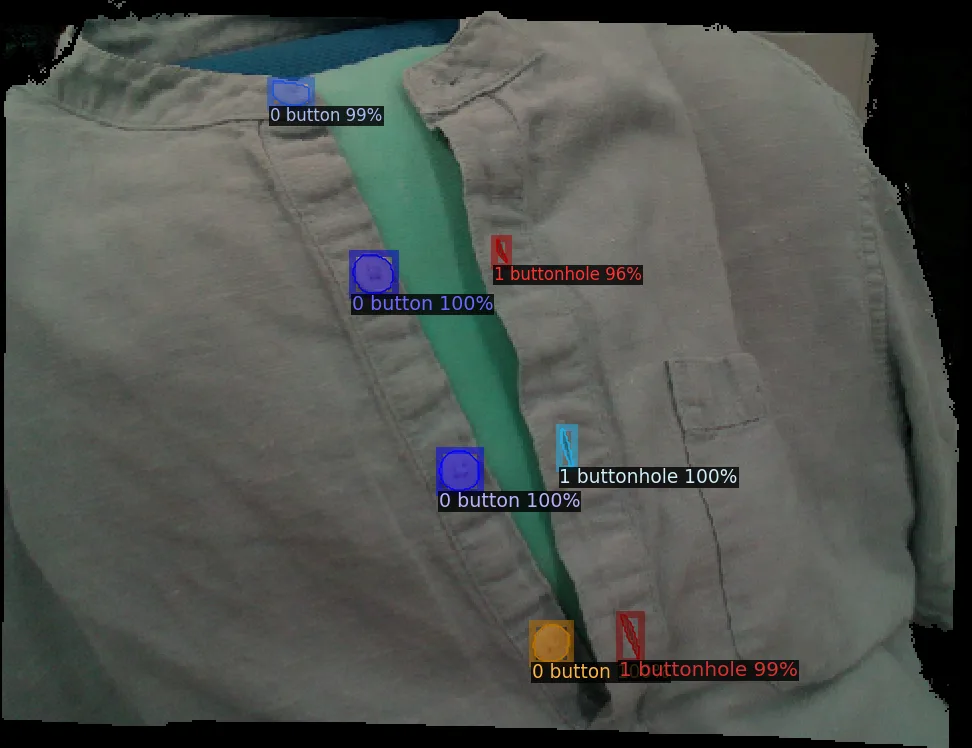

Integrated a dual-arm manipulator equipped with a LiDAR camera for precision fastening operations. Used deep-learning–based instance segmentation with Detectron2 for object recognition and developed path-planning algorithms for coordinated dual-arm motion under ROS2.
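The page does not describe the planner itself. As a minimal sketch of one ingredient of coordinated dual-arm motion, the Python below time-scales two straight-line joint-space paths so that both arms start and finish together under a shared per-joint speed limit. The function name and the `max_vel` and `dt` parameters are hypothetical, and the sketch does no collision checking between the arms.

```python
import numpy as np

def synchronized_linear_paths(q_left, g_left, q_right, g_right, max_vel=0.5, dt=0.01):
    """Straight-line joint-space paths for two arms that share one duration.

    Each arm's minimum duration is its largest joint displacement divided by
    max_vel (rad/s); both paths are stretched to the longer of the two so the
    arms start and stop together. Returns (left, right, duration), where left
    and right are (n + 1, dof) arrays of joint waypoints spaced dt seconds apart.
    """
    q_left, g_left, q_right, g_right = (
        np.asarray(a, dtype=float) for a in (q_left, g_left, q_right, g_right)
    )
    t_left = np.max(np.abs(g_left - q_left)) / max_vel
    t_right = np.max(np.abs(g_right - q_right)) / max_vel
    duration = max(t_left, t_right, dt)  # never shorter than one control step
    n = int(np.ceil(duration / dt))
    s = np.linspace(0.0, 1.0, n + 1)[:, None]  # path parameter shared by both arms
    left = q_left + s * (g_left - q_left)
    right = q_right + s * (g_right - q_right)
    return left, right, duration
```

Stretching both arms to the slower arm's duration keeps them in lockstep, which simplifies two-handed steps; a real planner would also check inter-arm collisions and use smoother velocity profiles than constant-speed interpolation.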

Key Challenges

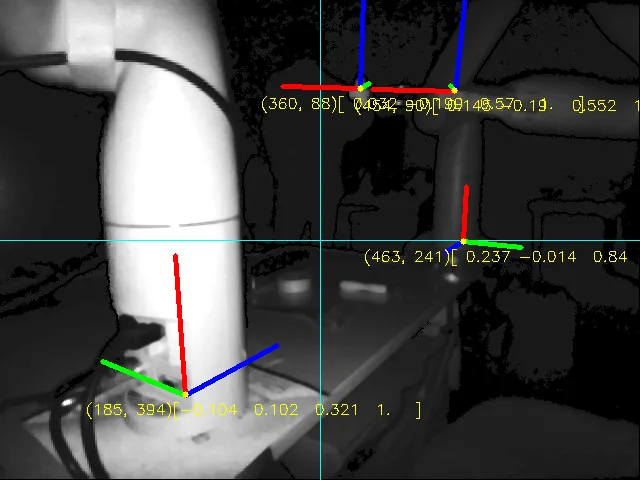

In-place LiDAR-to-Workspace Calibration

Calibrated the LiDAR sensor directly against the robot's workspace, in place, so that 3D points measured by the camera can be expressed in the robot's coordinate frame for accurate object localization.
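The calibration procedure is not detailed here. One common way to register a depth sensor to a robot frame is to measure the same points in both frames (for example, fiducials touched by the gripper and also located in the point cloud) and solve for the rigid transform with the SVD-based Kabsch method. The sketch below assumes such correspondences are available; `estimate_rigid_transform` and `to_robot_frame` are hypothetical names.

```python
import numpy as np

def estimate_rigid_transform(p_cam, p_robot):
    """Kabsch/SVD fit of a 4x4 transform T with p_robot ≈ R @ p_cam + t.

    p_cam, p_robot: (N, 3) arrays of corresponding points, N >= 3, not collinear.
    """
    p_cam = np.asarray(p_cam, dtype=float)
    p_robot = np.asarray(p_robot, dtype=float)
    c_cam, c_rob = p_cam.mean(axis=0), p_robot.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (p_cam - c_cam).T @ (p_robot - c_rob)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_rob - R @ c_cam
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, t
    return T

def to_robot_frame(T, p_cam):
    """Map one camera-frame point into the robot frame using homogeneous coordinates."""
    return (T @ np.append(np.asarray(p_cam, dtype=float), 1.0))[:3]
```

More than three well-spread correspondences help average out sensor noise, and the residual `p_robot - to_robot_frame(T, p_cam)` over held-out points gives a quick check of calibration quality.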

Perception Pipeline

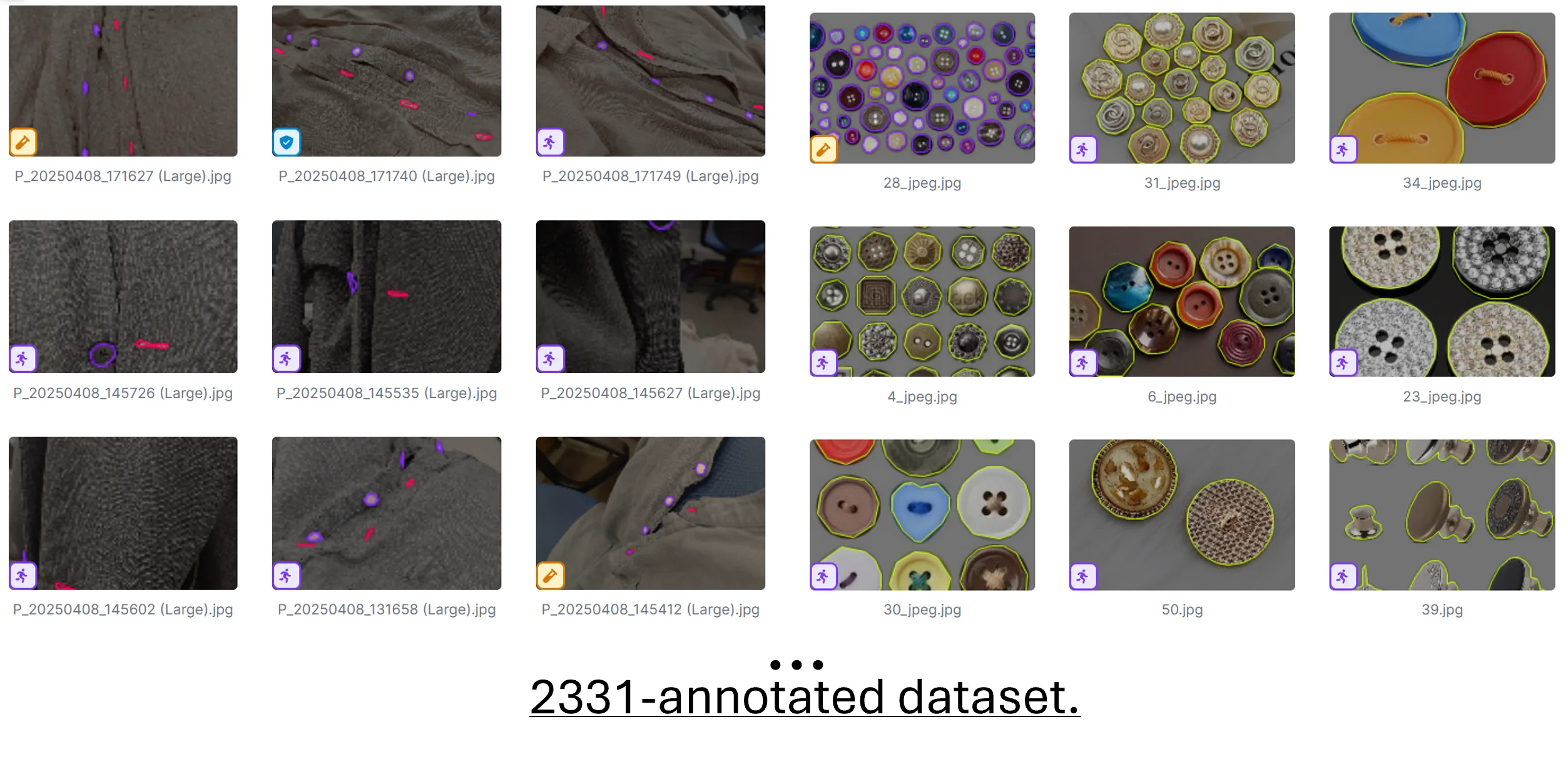

- Instance segmentation using Detectron2 (Mask R-CNN FPN) trained on a custom dataset of 2,331 annotated images

- An additional instance-tracking module that keeps object identities consistent across frames
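The tracking module is described only by its purpose. A minimal sketch of one simple approach, greedy intersection-over-union (IoU) matching of bounding boxes between consecutive frames, is shown below. `IouTracker` and its threshold are hypothetical, and unmatched tracks are dropped immediately rather than kept alive for several frames.

```python
from itertools import count

def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

class IouTracker:
    """Greedy IoU association: each detection inherits the id of the best-overlapping previous box."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}      # track id -> box from the previous frame
        self._ids = count()   # source of fresh track ids

    def update(self, detections):
        """Return one track id per detection, in detection order."""
        # All (overlap, track, detection) pairs, best overlap first.
        candidates = sorted(
            ((box_iou(box, det), tid, di)
             for tid, box in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        assigned = [None] * len(detections)
        used_tracks = set()
        for score, tid, di in candidates:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little
            if tid in used_tracks or assigned[di] is not None:
                continue
            assigned[di] = tid
            used_tracks.add(tid)
        # Unmatched detections start new tracks.
        for di in range(len(detections)):
            if assigned[di] is None:
                assigned[di] = next(self._ids)
        # Unmatched old tracks are discarded here (no track ageing).
        self.tracks = {tid: detections[di] for di, tid in enumerate(assigned)}
        return assigned
```

Greedy matching is simple and fast for a handful of objects; the Hungarian algorithm or mask-level IoU would be natural upgrades when objects overlap heavily.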

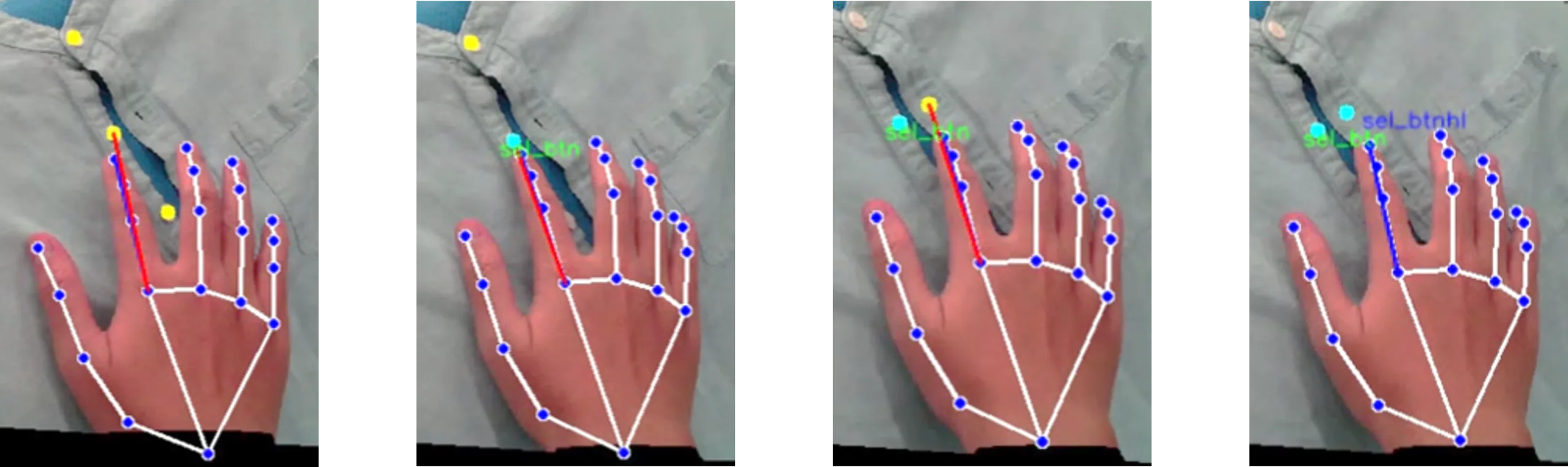
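To turn a 2D instance mask into a 3D object position, the masked depth pixels can be back-projected through a pinhole camera model. The sketch below assumes a depth image in metres that is pixel-aligned with the mask and known intrinsics `fx`, `fy`, `cx`, `cy`; `mask_centroid_3d` is a hypothetical name. The resulting camera-frame point could then be mapped into the robot frame using the workspace calibration.

```python
import numpy as np

def mask_centroid_3d(mask, depth_m, fx, fy, cx, cy):
    """Mean 3D point (camera frame, metres) of the masked pixels, or None if no valid depth.

    Pinhole back-projection: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    """
    mask = np.asarray(mask, dtype=bool)
    depth = np.asarray(depth_m, dtype=float)
    # Keep only mask pixels with a valid (positive) depth reading.
    v, u = np.nonzero(mask & (depth > 0))
    if u.size == 0:
        return None
    z = depth[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.array([x.mean(), y.mean(), z.mean()])
```

Averaging over the whole mask smooths per-pixel depth noise; a median would be more robust to stray background pixels at the mask boundary.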

Human Interaction: Point and Select

Users select a real-world object by physically pointing at it. The system interprets the pointing gesture and selects the indicated object as the target.
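How the gesture is interpreted is not described. A minimal sketch, assuming 3D keypoints for the start and end of the pointing arm (for example elbow and fingertip, from some pose estimator not named here) and 3D object centres in the same frame: cast a ray through the two keypoints and pick the object with the smallest angular offset inside a cone of `max_angle_deg`. `select_pointed_object` and its parameters are hypothetical.

```python
import numpy as np

def select_pointed_object(origin, tip, object_centers, max_angle_deg=15.0):
    """Index of the object closest in angle to the ray origin -> tip, or None if none is within the cone."""
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(tip, dtype=float) - origin
    norm = np.linalg.norm(direction)
    if norm == 0:
        return None  # degenerate gesture: keypoints coincide
    direction /= norm
    best, best_angle = None, np.deg2rad(max_angle_deg)
    for i, center in enumerate(object_centers):
        v = np.asarray(center, dtype=float) - origin
        dist = np.linalg.norm(v)
        if dist == 0:
            continue
        # Angle between the pointing ray and the direction to this object.
        angle = np.arccos(np.clip(np.dot(v, direction) / dist, -1.0, 1.0))
        if angle <= best_angle:
            best, best_angle = i, angle
    return best
```

Using an angular cone rather than perpendicular distance makes the tolerance scale naturally with how far away the object is.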


This description was written with the help of AI tools, but the project itself was not. The source code can be verified via the provided links where available.